Cover painting by Osman Hamdi Bey

Intelligence is a characteristic unique to humans. Artificial intelligence (AI) is the name we give to a new technology that humans have developed and likened to themselves. Humans have created this powerful technology and also have the responsibility and intelligence to manage it.

We were inspired by Osman Hamdi Bey's "Tortoise Trainer" for the title. It evokes the idea that a conscious human can train pretty much anything. That's why we regard artificial intelligence users as AI trainers.

You don’t have to be a tech expert to be an AI trainer. We believe that everyone has an AI trainer role that varies based on their expertise and position.

We have prepared examples and suggestions to better explain everyone's different tasks, organized in three parts: The Why, Decision Support Principle, and Management with AI Trainers.

Part 1 - The Why

The general AI solutions we see today can perform analysis, prediction, and automation to the extent that algorithmic assumptions are successful. No matter how successful they are, there is always a margin of error that must be taken into account.

The next level, generative artificial intelligence (GenAI), unlike general AI, “dreams” on algorithmic assumptions. Machine dreams are different from human dreams and can make big mistakes when left unchecked. (The biggest generative AI blunders of 2023)

Cases of Autonomous Artificial Intelligence

Even if the new solutions were sophisticated and cutting-edge, they would not be used if they did not create value for people.

Amazon’s investment of more than $3 billion in its retail GO AI solution, however capable the technology, has shown that we still need humans at shopping decision points. (So, Amazon’s ‘AI-powered’ cashier-free shops use a lot of … humans. Here’s why that shouldn’t surprise you) People don’t want to waste time shopping. However, they are uncomfortable handing over complete control of the products they buy and the money they spend to artificial intelligence.

Another area of misalignment is self-driving cars. Although driverless technology is technically almost feasible, a closer look shows that expectations for personal use of autonomous vehicles are falling. (Partially autonomous cars forecast to comprise 10% of new vehicle sales by 2030) As irrational as it may seem, people may not want to hand over complete control of their car to artificial intelligence. Individual users enjoy the sense of control that comes with driving. On the other hand, we believe that autonomous driving will develop more rapidly in commercial applications where individual pleasure is not a factor.

Machines Predicting Humans

Artificial intelligence will always have a margin of error when predicting human behavior. This is because humans often make emotional or irrational decisions for reasons we cannot understand beforehand. (Dan Ariely on 10 Irrational Human Behaviors) This human quality that differentiates us from machines can always keep us unique. Rather than saying that artificial intelligence can predict the future, it would be more accurate to say that it makes algorithmic assumptions.

An example of how these assumptions can clash with reality comes from the real estate sector.

Zillow, a real estate technology company, experienced a $304 million loss in the third quarter of 2021 due to high error rates in its AI algorithm for predicting home prices. Zillow ended up buying homes at prices above market value. (Zillow’s home-buying debacle shows how hard it is to use AI to value real estate) Real estate technology strategist Mike DelPrete summarized the issue: “Accurately predicting real estate prices without any margin for error is nearly impossible because home buying decisions are driven by emotions and behaviors that vary greatly from person to person.”

When decision-making responsibility is taken away from humans, the number of problems caused by artificial intelligence may increase further.

When AI is positioned to support decision-making rather than replace it, the results tend to be more positive.

Part 2 - Decision Support Principle

No matter how sophisticated an artificial intelligence solution may be, it still relies on predetermined algorithm-based predictions. When it supports decision-making rather than replacing it, it can produce more successful results. (Knowledge at Wharton - What Impact Will AI Have on Organizations? – Bob Meyer & Roger Gu | AI in Focus Series)

Amazon GO's autonomous grocery shopping may fail. However, express checkout lanes in physical stores and online grocery shopping find a middle ground by saving time without taking away people's decision-making control. Today, autonomous driving may not be a real need for individuals. However, remotely controlled or autonomous vehicles, drones, and autonomous commercial fleet solutions meet a genuine demand. (Workinlot - Global Entrepreneurship Congress 2018 Istanbul - Future of mobility workshop) It doesn't make sense for artificial intelligence to determine real estate values, but its superhuman data scanning capabilities can support human pricing decisions. When chatbots steer customers' decisions on critical issues, problems can follow. A middle ground that has been used successfully is a chatbot that understands the customer, gives rule-based guidance on simple decisions, and refers critical decisions to managers.
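The chatbot middle ground described above can be sketched as a small routing rule. This is only an illustration in Python, not a production design: the `Request` fields, the `CRITICAL_TOPICS` set, the `0.8` confidence threshold, and the `route` function are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass

# Hypothetical topics that should always reach a human (an assumption for this sketch).
CRITICAL_TOPICS = {"refund", "contract", "complaint"}

# Hypothetical cut-off below which the AI suggestion is not trusted on its own.
CONFIDENCE_THRESHOLD = 0.8


@dataclass
class Request:
    topic: str            # what the customer is asking about
    ai_suggestion: str    # what the AI would answer
    ai_confidence: float  # the model's own confidence, between 0 and 1


def route(request: Request) -> str:
    """Decide who makes the final call: a simple rule or a human manager."""
    if request.topic in CRITICAL_TOPICS:
        # Critical decisions always go to a person; the AI suggestion is only input.
        return "manager"
    if request.ai_confidence < CONFIDENCE_THRESHOLD:
        # Low confidence means the margin of error is too large to automate.
        return "manager"
    # Simple, high-confidence cases get rule-based guidance.
    return "automated"


if __name__ == "__main__":
    print(route(Request("opening hours", "We open at 9:00.", 0.95)))
    print(route(Request("refund", "Refund approved.", 0.99)))
```

In this sketch the AI never decides a critical case by itself, however confident it is. Its suggestion still travels with the request, so the manager can use it as decision support.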

A final example supporting this finding comes from our field. Major consulting firms question AI's ability to make human-equivalent decisions.

A collaborative study by Harvard Business School and BCG found that consultants using GPT-4 were more productive and efficient across various tasks. According to the study, consultants with AI assistance completed 12% more tasks and finished them 25% faster. Performance among new consultants increased by 43%, while experienced consultants also saw a 17% improvement. (Harvard And BCG Unveil The Double-Edged Sword Of AI In The Workplace)

Despite these gains, generative AI's tendency to produce "hallucinations based on algorithmic assumptions" in areas where it lacked data significantly increased consultants' error rates. Consultants using AI had a 19% higher likelihood of making mistakes due to these hallucinations.

The research concludes that AI is highly valuable when properly trained for simple but numerous decision points. However, it is very risky on its own in situations where decision-making is critical. In critical decisions, the human capacity for complex and sometimes inexplicably intuitive evaluation is unrivaled.

By positioning artificial intelligence as a decision support tool, we can train it to serve humans. To do this, we also need to decide how to manage it.

Part 3 - AI Trainers and Governance

In every field where artificial intelligence is used, non-technical expertise is also needed.

These experts are responsible for ensuring that AI solutions are ethical, practical, and safe for both users and employees. Because they bring different areas of expertise, appropriate processes for AI development and governance are required.

[Figure: AI management process outline]

We developed the process outline above to provide a more concrete interpretation of AI management responsibilities.

- Determining the Area of Use: Defining use cases by listing problems to be solved with AI, prioritizing them, and documenting expected outcomes and risks.

- Stakeholder Identification: Determining internal and external stakeholders for collaboration, whether using a custom or pre-built algorithm, and clarifying expectations regarding development processes and intellectual property rights.

- Data: Identifying the data required for solution development, preparing it for model training, and documenting the process.

- Testing and Iteration: Conducting PoC (Proof of Concept) tests before final deployment, addressing identified risks, and developing success metrics for ongoing monitoring.

- Implementation: Ensuring end-user experiences are auditable and reportable while evaluating scaling opportunities.
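The outline above can also be kept as a simple checklist that a team tracks per project. The following Python sketch assumes the stages are completed in the order listed, which the outline suggests but does not state. The `AIProject` class and its methods are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass, field

# Stage names follow the outline above; the strict ordering is an assumption.
STAGES = [
    "Determining the Area of Use",
    "Stakeholder Identification",
    "Data",
    "Testing and Iteration",
    "Implementation",
]


@dataclass
class AIProject:
    name: str
    completed: set = field(default_factory=set)

    def complete(self, stage: str) -> None:
        """Mark a stage done, but only if every earlier stage is already done."""
        index = STAGES.index(stage)  # raises ValueError for an unknown stage
        missing = [s for s in STAGES[:index] if s not in self.completed]
        if missing:
            raise RuntimeError(f"Finish first: {', '.join(missing)}")
        self.completed.add(stage)

    def next_stage(self):
        """Return the first stage not yet completed, or None when all are done."""
        for stage in STAGES:
            if stage not in self.completed:
                return stage
        return None


if __name__ == "__main__":
    project = AIProject("pricing assistant")
    project.complete("Determining the Area of Use")
    print(project.next_stage())
```

A gate like this keeps a project from reaching Implementation before its use case, stakeholders, data, and tests have been documented.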

Artificial intelligence management hierarchies and processes will vary depending on the organization. What organizations have in common is that corporate use of artificial intelligence keeps growing.

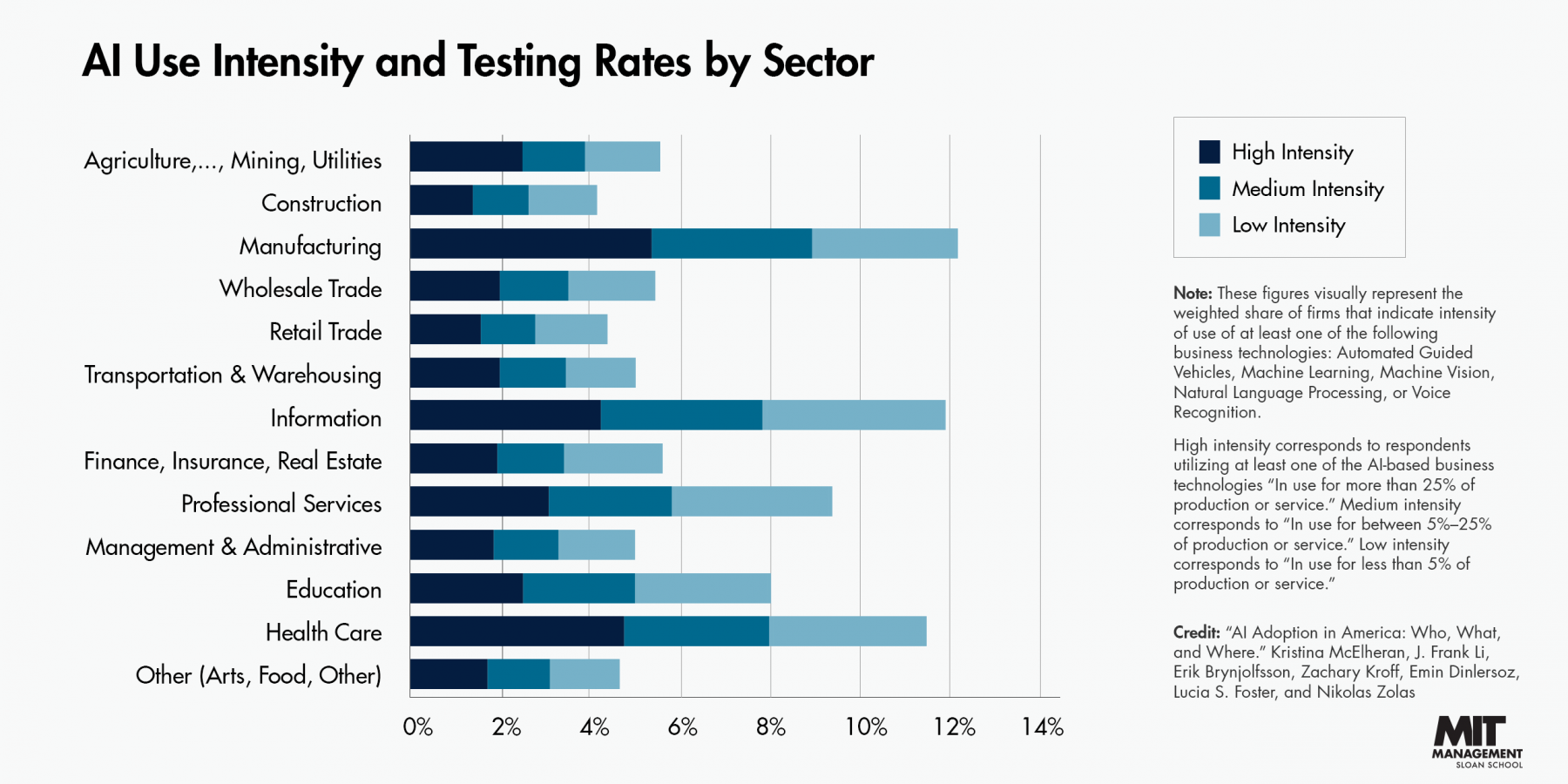

According to a study by the MIT Sloan School of Management, over 50% of companies with more than 5,000 employees and more than 60% of companies with over 10,000 employees are using AI today. (The who, what, and where of AI adoption in America)

As we navigate the digital landscape's vast data repositories, many of us are incorporating AI solutions into our workflows. Collectively, we bear the responsibility of ensuring these systems, shaped through our interactions, remain anchored to their purpose of serving human well-being.

Businesses can implement structured AI governance frameworks. Multidisciplinary teams can contribute to ethical oversight. Users can demand algorithmic transparency from service providers, ensuring technology development prioritizes human well-being.

These principles invite ongoing refinement, but one truth remains undeniable: AI will continue accelerating change, and as its influence expands, responsible governance becomes increasingly vital.